Posts on this page:

- Certificate Autoenrollment in Windows Server 2016 — Summary

- Certificate Autoenrollment in Windows Server 2016 (part 4)

- Certificate Autoenrollment in Windows Server 2016 (part 3)

- Certificate Autoenrollment in Windows Server 2016 (part 2)

- Certificate Autoenrollment in Windows Server 2016 (part 1)

This blog post concludes the Certificate Autoenrollment in Windows Server 2016 blog post series. Here is a list of posts in the series:

The first part introduces certificate autoenrollment and describes the certificate enrollment architecture in Windows 10 and Windows Server 2016.

The second part explains the certificate autoenrollment architecture, its components, and detailed processing rules.

The third part provides a step-by-step guide to configuring and using the certificate autoenrollment feature.

The last part provides information about advanced certificate autoenrollment features, scenarios, and troubleshooting.

References

The following reference documents were used to write this whitepaper:


This is the fourth (and last) part of the Certificate Autoenrollment in Windows Server 2016 whitepaper. Other parts:

- Certificate Autoenrollment in Windows Server 2016 (part 1)

- Certificate Autoenrollment in Windows Server 2016 (part 2)

- Certificate Autoenrollment in Windows Server 2016 (part 3)

Configuring Advanced Features

This section discusses templates that require certificate manager approval, self-registration authority, and how to supersede a certificate template.

Requiring certificate manager approval

A specific certificate template can require that a certificate manager (CA officer) approve the request prior to the CA actually signing and issuing the certificate. This advanced security feature works in conjunction with autoenrollment and is enabled on the Issuance Requirements tab of a given certificate template (Figure 25). This setting overrides any pending setting on the CA itself.
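The precedence rule above can be sketched as a tiny state model. This is a minimal illustration, not how the CA policy module is actually implemented: the names `Disposition`, `request_disposition`, and `approve` are hypothetical, and the fallback to a CA-wide pending setting when the template does not require approval is an assumption.

```python
from enum import Enum


class Disposition(Enum):
    ISSUED = "issued"
    PENDING = "pending"  # waits for a certificate manager (CA officer)


def request_disposition(template_requires_approval: bool,
                        ca_pending_by_default: bool) -> Disposition:
    """Hypothetical model of the initial disposition of an enrollment request."""
    if template_requires_approval:
        # The template's Issuance Requirements setting wins over the CA setting.
        return Disposition.PENDING
    # ASSUMPTION: without the template setting, the CA-wide setting applies.
    return Disposition.PENDING if ca_pending_by_default else Disposition.ISSUED


def approve(disposition: Disposition) -> Disposition:
    """A certificate manager approves a pending request so the CA can issue it."""
    return Disposition.ISSUED if disposition is Disposition.PENDING else disposition
```

With approval required, a request stays pending whatever the CA is configured to do, and only an explicit `approve` step moves it to issued.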


This is the third part of the Certificate Autoenrollment in Windows Server 2016 whitepaper. Other parts:

- Certificate Autoenrollment in Windows Server 2016 (part 1)

- Certificate Autoenrollment in Windows Server 2016 (part 2)

Configuring Autoenrollment

In general, autoenrollment configuration consists of three steps: configuring the autoenrollment policy, preparing certificate templates, and preparing certificate issuers. Each step is described in the following sections.

Configuring autoenrollment policy

The recommended way to configure autoenrollment policy is to use Group Policy. Group Policy is available in both domain and workgroup environments (in a workgroup, as local Group Policy). This section describes how to configure autoenrollment with the Group Policy editor. It is recommended to enable the autoenrollment policy in both the user and computer configuration.


This is the second part of the Certificate Autoenrollment in Windows Server 2016 whitepaper. Other parts:

Certificate Autoenrollment Architecture

This section discusses the autoenrollment architecture, an analysis of the components of the autoenrollment process, and working with certificate authority interfaces.

Autoenrollment internal components

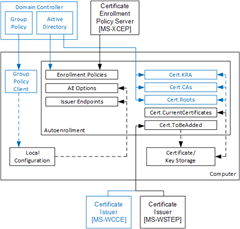

Autoenrollment consist of several components installed on each computer. Depending on environment (Active Directory or workgroup) some components may present or not present. The following diagram outlines autoenrollment components and their high-level interactions in both environments:

The meaning of each component is provided in next sections.

Group Policy client

This component is not available in workgroup environments.

The client module responsible for retrieving Group Policy from a domain controller, processing it, and storing and maintaining policy on the local computer. The Group Policy client updates the local configuration with certificate enrollment policy (CEP) information.
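The role of the Group Policy client can be modeled as a refresh step that copies CEP information into local configuration. This is a minimal sketch under stated assumptions: `LocalConfiguration` and `group_policy_refresh` are hypothetical names, CEPs are represented as plain strings, and the real client writes to the registry rather than to an in-memory object.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LocalConfiguration:
    """Hypothetical stand-in for the registry-backed local configuration."""
    enrollment_policies: List[str] = field(default_factory=list)


def group_policy_refresh(config: LocalConfiguration,
                         domain_policy_ceps: List[str],
                         domain_joined: bool) -> LocalConfiguration:
    """Model of the Group Policy client writing CEP information locally."""
    if not domain_joined:
        # Workgroup: no domain Group Policy is retrieved, so local
        # configuration is left to local configuration tools.
        return config
    for cep in domain_policy_ceps:
        # Skip policies already present so repeated refreshes are idempotent.
        if cep not in config.enrollment_policies:
            config.enrollment_policies.append(cep)
    return config
```

Making the refresh idempotent mirrors the fact that Group Policy is reapplied periodically, so the same CEP should not accumulate in local configuration.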

Local configuration

Registry storage that contains information about certificate enrollment policies (CEPs). This information is then used to populate the configuration of the Enrollment Policies, AE Options, and Certificate Issuers components. Local configuration is stored in the system registry, in both the HKLM and HKCU hives, under the following key:

SOFTWARE\Policies\Microsoft\Cryptography\AutoEnrollment\
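A minimal sketch of inspecting this key from Python, assuming the autoenrollment options are kept in a DWORD value named `AEPolicy`. The flag values are recalled from the `AUTO_ENROLLMENT_*` constants in the Windows SDK header `certca.h` and should be treated as assumptions to verify; `read_aepolicy` and `decode_aepolicy` are hypothetical helper names. HKLM corresponds to the computer configuration and HKCU to the user configuration.

```python
import sys
from typing import Optional

# Registry key from the text; it exists under both HKLM and HKCU.
KEY_PATH = r"SOFTWARE\Policies\Microsoft\Cryptography\AutoEnrollment"

# ASSUMPTION: values recalled from AUTO_ENROLLMENT_* constants in certca.h.
ENABLE_TEMPLATE_CHECK = 0x00000001       # AUTO_ENROLLMENT_ENABLE_TEMPLATE_CHECK
ENABLE_MY_STORE_MANAGEMENT = 0x00000002  # AUTO_ENROLLMENT_ENABLE_MY_STORE_MANAGEMENT
ENABLE_PENDING_FETCH = 0x00000004        # AUTO_ENROLLMENT_ENABLE_PENDING_FETCH
DISABLE_ALL = 0x00008000                 # AUTO_ENROLLMENT_DISABLE_ALL


def read_aepolicy(hive_name: str = "HKLM") -> Optional[int]:
    """Return the AEPolicy DWORD from HKLM (computer) or HKCU (user), or None."""
    if sys.platform != "win32":
        return None  # winreg is only available on Windows
    import winreg
    hive = winreg.HKEY_LOCAL_MACHINE if hive_name == "HKLM" else winreg.HKEY_CURRENT_USER
    try:
        with winreg.OpenKey(hive, KEY_PATH) as key:
            value, _value_type = winreg.QueryValueEx(key, "AEPolicy")
            return int(value)
    except FileNotFoundError:
        return None  # key or value is not configured


def decode_aepolicy(value: int) -> dict:
    """Split an AEPolicy value into named options.

    ASSUMPTION: when DISABLE_ALL is set, the other options are treated as off.
    """
    disabled = bool(value & DISABLE_ALL)
    return {
        "disabled": disabled,
        "template_check": not disabled and bool(value & ENABLE_TEMPLATE_CHECK),
        "my_store_management": not disabled and bool(value & ENABLE_MY_STORE_MANAGEMENT),
        "pending_fetch": not disabled and bool(value & ENABLE_PENDING_FETCH),
    }


if __name__ == "__main__":
    for hive_name in ("HKLM", "HKCU"):
        raw = read_aepolicy(hive_name)
        print(hive_name, "not configured" if raw is None else decode_aepolicy(raw))
```

Checking both hives reflects the recommendation to enable autoenrollment in both the user and computer configuration: each hive is evaluated for its own context.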

Enrollment Policies

Contains a collection of CEPs. In an Active Directory environment, an LDAP-based domain policy is added by default. XCEP policies must be configured by an administrator, either in Group Policy on domain controllers (available only in Active Directory), with local configuration tools, or both. Each policy has the following notable properties:


Hello, everyone! Today I’m starting a new community whitepaper publication on certificate autoenrollment in Windows 10 and Windows Server 2016. This is a deeply rewritten version of the whitepaper published 15 years ago by David B. Cross: Certificate Autoenrollment in Windows XP. Certificate enrollment and autoenrollment have changed significantly since the original whitepaper was published. Unfortunately, neither Microsoft nor the community has made any effort to update the topic. So I put some effort into exploring the subject and writing a brand-new whitepaper-style document that covers all recent changes in certificate autoenrollment.

This whitepaper is a structured compilation of a large number of official Microsoft documents and articles from the TechNet and MSDN sites. The full list of reference documents and a full-featured printable PDF version will be provided in the last post of this series.

The whitepaper uses the following structure:

- Certificate enrollment architecture

- Certificate autoenrollment architecture

- The autoenrollment process and task sequence

- Autoenrollment configuration

- Certificate autoenrollment in action

- Advanced features

- Troubleshooting

The first post of the series covers only general questions and the certificate enrollment architecture. It is important to understand how certificate enrollment works in modern Windows operating systems, because autoenrollment relies heavily on this architecture. So, let’s start!
